Confiabilidad, precisión o reproducibilidad de las mediciones. Métodos de valoración, utilidad y aplicaciones en la práctica clínica

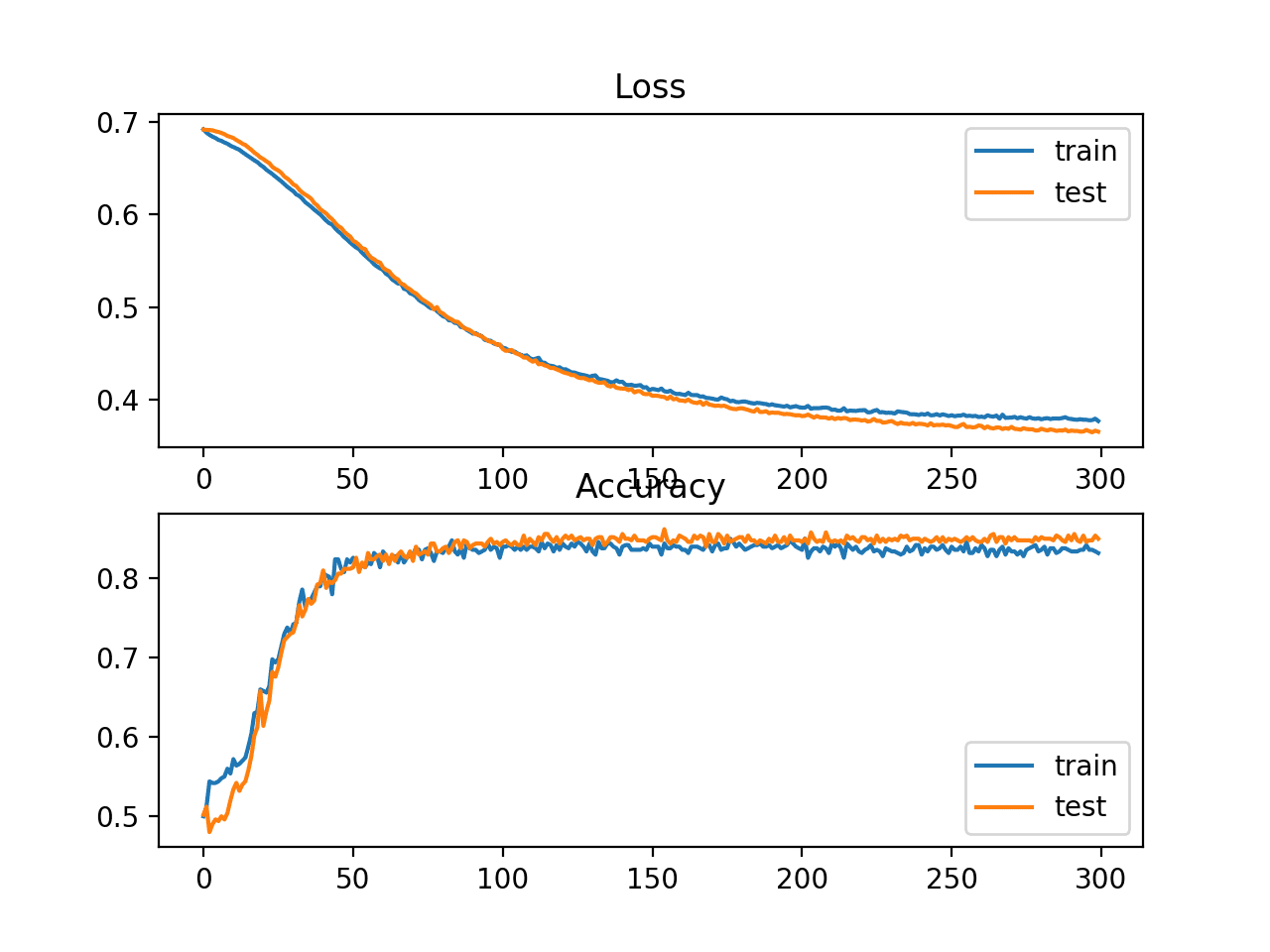

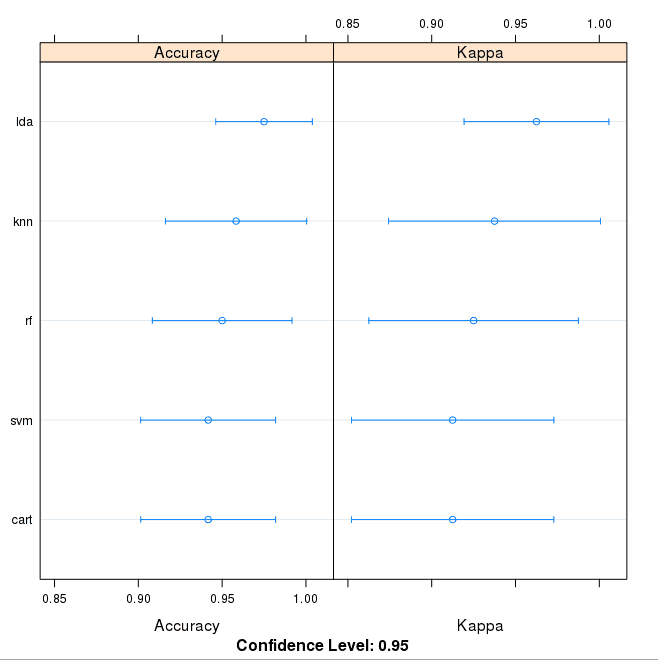

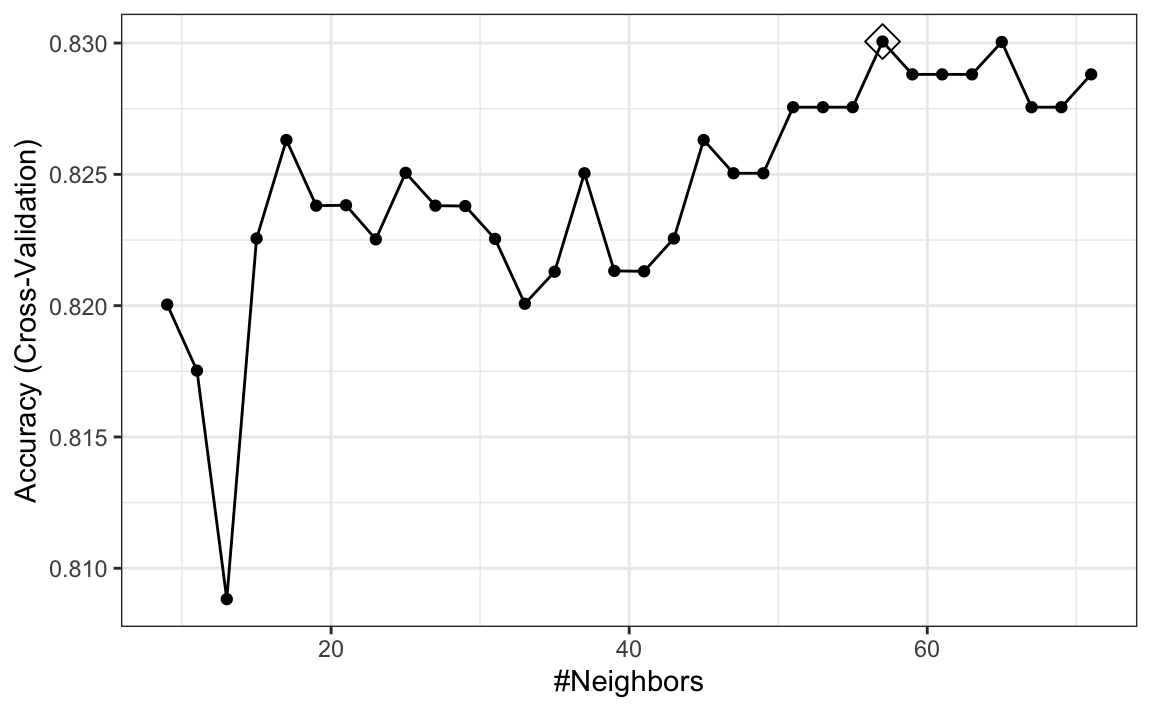

Comparison of overall accuracy and kappa coefficient using different... | Download Scientific Diagram

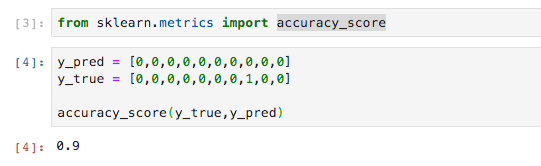

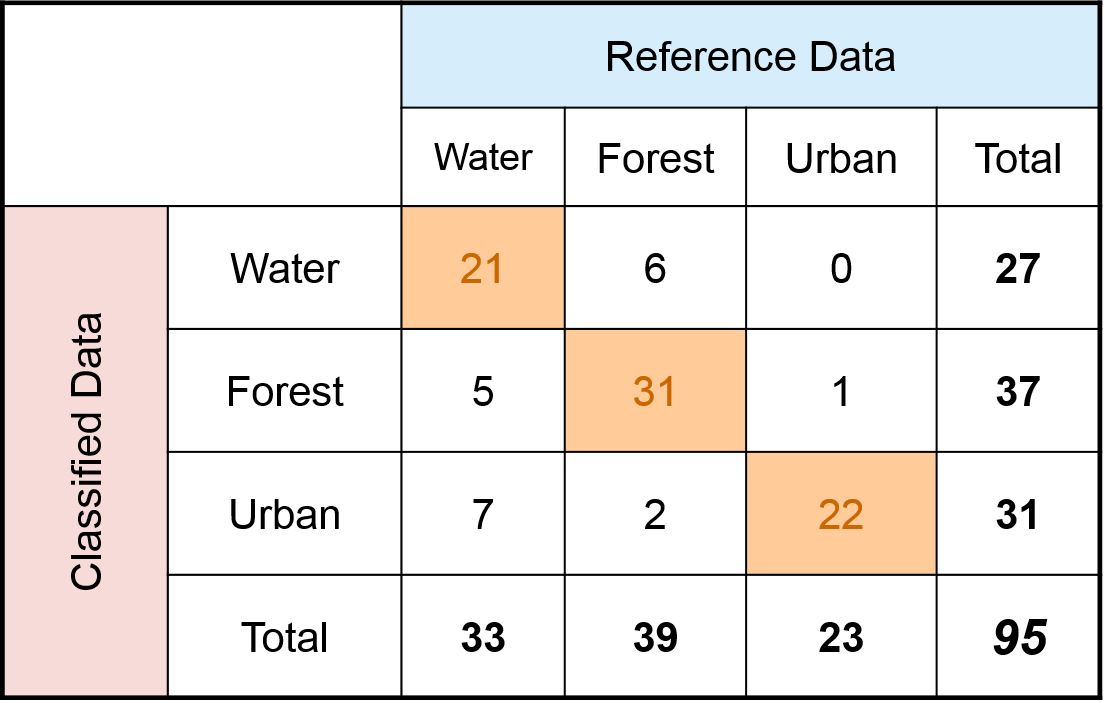

Cohen's Kappa: What it is, when to use it, and how to avoid its pitfalls | by Rosaria Silipo | Towards Data Science